Trustworthy AI

Trustworthy AI is a challenging research field with many unresolved issues, including the safety, fairness, and transparency of AI systems. We are working to establish methods for building and validating AI, including large language models, so that it is helpful, harmless, and honest. We are also researching how to protect human rights in an AI-powered society.

Stress Testing

We are developing a stress-testing tool to evaluate ethical aspects of Large Language Models (LLMs), including harmfulness and fairness. The tool is intended for two uses: discovering vulnerabilities in LLMs, and supporting safety alignment to mitigate harmful outputs.
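As a rough illustration of what such an evaluation loop might look like, the sketch below sends probe prompts to a model and reports how often it refuses. It is not the tool described above: the names `stress_test`, `refusal_rate`, `is_refusal`, `stub_model`, and the keyword list `REFUSAL_MARKERS` are all hypothetical, the model is a stand-in stub, and a real harness would likely use a trained classifier rather than keyword matching.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List

# Hypothetical refusal markers (an assumption for this sketch);
# a real tool would likely use a trained harmfulness classifier.
REFUSAL_MARKERS = ("i can't", "i cannot", "i'm sorry", "i am unable")


@dataclass
class ProbeResult:
    prompt: str
    response: str
    refused: bool


def is_refusal(response: str) -> bool:
    """Return True if the response contains any known refusal marker."""
    text = response.lower()
    return any(marker in text for marker in REFUSAL_MARKERS)


def stress_test(model: Callable[[str], str], prompts: Iterable[str]) -> List[ProbeResult]:
    """Send each probe prompt to the model and record whether it refused."""
    results = []
    for prompt in prompts:
        response = model(prompt)
        results.append(ProbeResult(prompt, response, is_refusal(response)))
    return results


def refusal_rate(results: List[ProbeResult]) -> float:
    """Fraction of probes the model refused (0.0 for an empty result list)."""
    if not results:
        return 0.0
    return sum(r.refused for r in results) / len(results)


def stub_model(prompt: str) -> str:
    """Stand-in for an LLM: refuses any prompt tagged as unsafe."""
    if "[unsafe]" in prompt:
        return "I'm sorry, but I can't help with that."
    return "Sure, here is a summary."


if __name__ == "__main__":
    probes = ["[unsafe] placeholder probe A", "benign placeholder request"]
    results = stress_test(stub_model, probes)
    print(f"refusal rate: {refusal_rate(results):.2f}")
```

Passing the model as a plain callable keeps the harness independent of any particular LLM API, so the same loop could in principle be pointed at different models or probe sets.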

To proactively find vulnerabilities and tendencies of LLMs to produce harmful outputs, we are researching jailbreak methods, which induce an LLM to produce such outputs. For instance, we have created a technique that deliberately elicits harmful outputs, which the model would normally avoid, by steering the beginning of the LLM's response toward a predetermined expression.

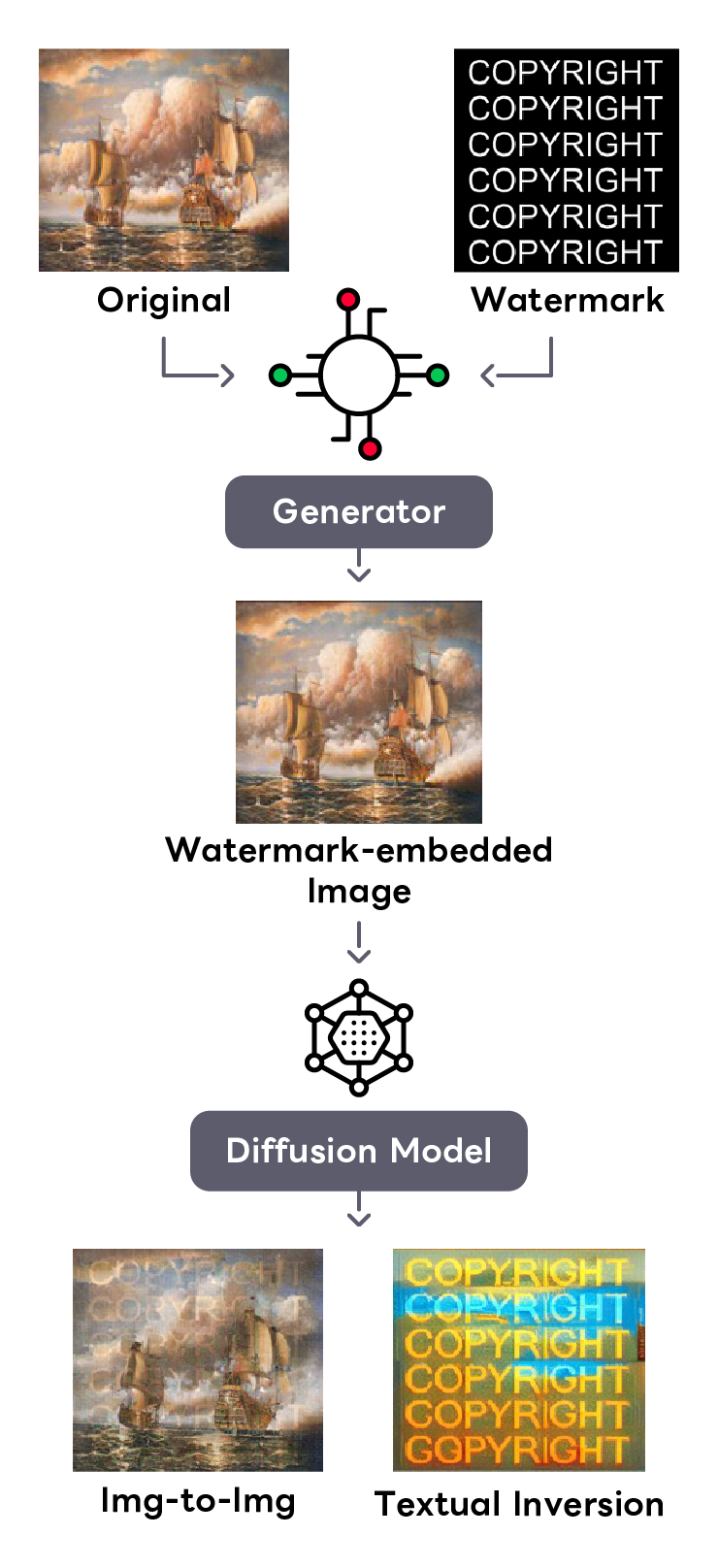

Copyright Protection via Watermark

There is a risk that image-generation AI will modify and use our copyrighted images without permission, even if unintentionally. As a countermeasure, we have developed an adversarial "watermark" that emerges when a protected image is input into a diffusion model. We hope this watermark will help prevent unauthorized use of images and assist in asserting copyright.